Foundation models are gaining traction in radiology, offering new ways to automate reporting, improve quality, and integrate patient context. This primer offers a clear overview of how these models work, how they’re adapted for clinical use, and where they fit into the modern radiology workflow.

What Are Foundation Models?

Foundation models are large-scale AI systems trained on vast and diverse datasets—often consisting of text, images, or both. Originally designed to solve broad tasks such as language translation, summarization, and reasoning, these models are now being adapted to support domain-specific tasks across fields like law, finance, and medicine.

In radiology, foundation models offer new opportunities to streamline and augment workflows by generating, reviewing, and contextualizing clinical information. But to be clinically useful, these models must be carefully adapted to reflect the precision, sensitivity, and reliability required in healthcare.

General vs. Healthcare-Specific Foundation Models

There are two broad categories of foundation models relevant to radiology:

General-Purpose Models

These models are trained on public internet data and designed for broad reasoning and dialogue tasks. They offer high flexibility but can hallucinate facts or misinterpret clinical nuances.

Examples include:

- GPT-4.5 Preview (OpenAI)

- Claude 3 (Anthropic)

- Gemini 2.0 (Google)

- Grok-3 (xAI)

- Mistral and Mixtral (Mistral AI)

- Llama 3 (Meta)

Healthcare-Specific Models

These models are trained or fine-tuned on medical datasets such as research articles, textbooks, radiology reports, and electronic health records (EHRs). They tend to be more accurate in clinical contexts but are significantly more resource-intensive to build and maintain.

Examples include:

- Med-Gemini (Google DeepMind): Multimodal medical models built on Gemini, the successor to Med-PaLM, with imaging support

- Harrison.rad.1 (Harrison.ai): Radiology foundation model for X-ray

- MAIRA-2 (Microsoft Research): Multimodal model for grounded radiology report generation from chest X-rays

- GatorTron (UF Health + NVIDIA): Trained on >90B words of text, most of it de-identified clinical notes

- Watsonx.ai for Health (IBM): Focused on clinical summarization and extraction

Key tradeoff: General models are accessible and flexible but require guardrails; healthcare-specific models are accurate but demand clinical data, regulatory compliance, and significant compute resources.

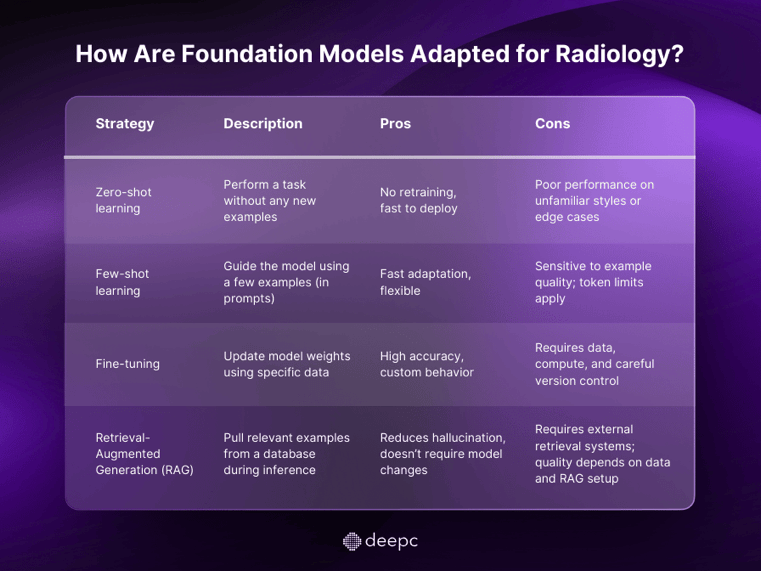

How Are Foundation Models Adapted for Radiology?

Not all foundation models are created equal—or used the same way. Adapting a model for radiology requires balancing accuracy, speed, and clinical alignment. There are four common adaptation strategies:

These strategies are not mutually exclusive—many healthcare AI systems combine them to balance efficiency and precision.

What Are Foundation Models Doing in Radiology Today?

Radiology offers a rich environment for foundation models due to its mix of structured images, standardized reporting, and EHR data. Today, applications span both text and image domains.

Text Applications

- Impression generation: Drafting radiology impressions based on dictated findings.

- Quality assurance (QA): Reviewing completed reports for inconsistencies or missed findings.

- Contextualization: Pulling relevant information from the patient history to support image interpretation.
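To make impression generation and contextualization concrete, here is a minimal Python sketch of how dictated findings and a few history items might be assembled into a prompt for a text model. Everything in it is an assumption made for illustration: the `StudyContext` container, the `build_impression_prompt` helper, and the prompt wording are hypothetical and not taken from any particular product. The model call itself is deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class StudyContext:
    """Hypothetical container for the inputs to an impression draft."""
    findings: str                                      # dictated findings text
    history: list[str] = field(default_factory=list)   # relevant history items


def build_impression_prompt(ctx: StudyContext, max_history: int = 3) -> str:
    """Assemble a plain-text prompt; the wording is illustrative only."""
    lines = ["You are assisting a radiologist. Draft a concise impression."]
    if ctx.history:
        lines.append("Relevant history:")
        # Cap the history so the prompt stays short and focused.
        lines.extend(f"- {item}" for item in ctx.history[:max_history])
    lines.append("Findings:")
    lines.append(ctx.findings.strip())
    lines.append("Impression:")
    return "\n".join(lines)


ctx = StudyContext(
    findings="Right lower lobe consolidation. No pleural effusion.",
    history=["Fever and cough for 3 days", "Former smoker"],
)
prompt = build_impression_prompt(ctx)
print(prompt)
```

In practice the resulting string would be sent to whichever model is in use, and the draft would go back to the radiologist for review rather than straight into the report.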

Image Applications

- Report generation: Drafting a full radiology report based on image interpretation.

- Retrieval: Finding similar prior cases to support comparative analysis or decision-making.
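Retrieval of similar prior cases is commonly framed as nearest-neighbor search over embeddings. The sketch below assumes each case has already been turned into a numeric vector by some encoder (not shown) and ranks prior cases by cosine similarity. The case IDs, the toy three-dimensional vectors, and the `top_k_similar` helper are hypothetical, and a production system would typically use a vector index rather than a linear scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero embedding as matching nothing
    return dot / (norm_a * norm_b)


def top_k_similar(query, index, k=2):
    """Return the k (case_id, score) pairs most similar to the query."""
    scored = [(case_id, cosine_similarity(query, vec)) for case_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional embeddings; real ones would come from an image or text encoder.
prior_cases = {
    "case-001": [0.9, 0.1, 0.0],
    "case-002": [0.0, 1.0, 0.0],
    "case-003": [0.8, 0.2, 0.1],
}
query_embedding = [1.0, 0.0, 0.0]
results = top_k_similar(query_embedding, prior_cases, k=2)
print(results)
```

Here `case-001` and `case-003` rank highest because their vectors point in nearly the same direction as the query, while `case-002` is orthogonal to it.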

These systems can integrate multiple data sources—patient history, lab results, prior imaging—to generate and verify draft reports, flag missing findings, and improve radiologist efficiency.

Where Do These Models Fit in the Radiology Workflow?

Foundation models are not just another add-on. They have the potential to integrate across the radiology workflow, supporting decision-making at multiple stages:

📌 Prediction: Triage or recommend protocols before the exam

📌 Detection: Identify abnormalities in image, text, or tabular data

📌 Interpretation: Assist with report drafting and contextual analysis

📌 Reporting & QA: Verify consistency and flag discrepancies

When tightly integrated with the Electronic Health Record (EHR), these models can act as intelligent copilots to reduce cognitive load, speed up reporting, and ultimately enhance care quality.
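As a small illustration of the Reporting & QA stage, the Python sketch below flags one narrow kind of discrepancy: a side (left or right) that appears in the impression but never in the findings. It is a deliberately simple rule-based stand-in, not a foundation model, and the function names are hypothetical. A model-based reviewer would aim to catch a much wider range of inconsistencies.

```python
import re

# Matches the whole words "left" or "right", in any letter case.
_SIDE = re.compile(r"\b(left|right)\b", re.IGNORECASE)


def sides_mentioned(text: str) -> set[str]:
    """Lower-cased set of sides ("left", "right") named in the text."""
    return {m.lower() for m in _SIDE.findall(text)}


def laterality_discrepancy(findings: str, impression: str) -> set[str]:
    """Sides named in the impression but never in the findings."""
    return sides_mentioned(impression) - sides_mentioned(findings)


findings = "Consolidation in the right lower lobe. No pleural effusion."
ok_impression = "Right lower lobe pneumonia."
bad_impression = "Left lower lobe pneumonia."

print(laterality_discrepancy(findings, ok_impression))   # empty set: consistent
print(laterality_discrepancy(findings, bad_impression))  # flags "left"
```

A check like this would only surface a flag for the radiologist to review. It cannot tell which section is correct, and it misses cases where both sides are legitimately mentioned.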

What’s Next?

As we move toward more advanced foundation models, especially multimodal systems that can reason across both images and text, the potential for automation and augmentation grows.

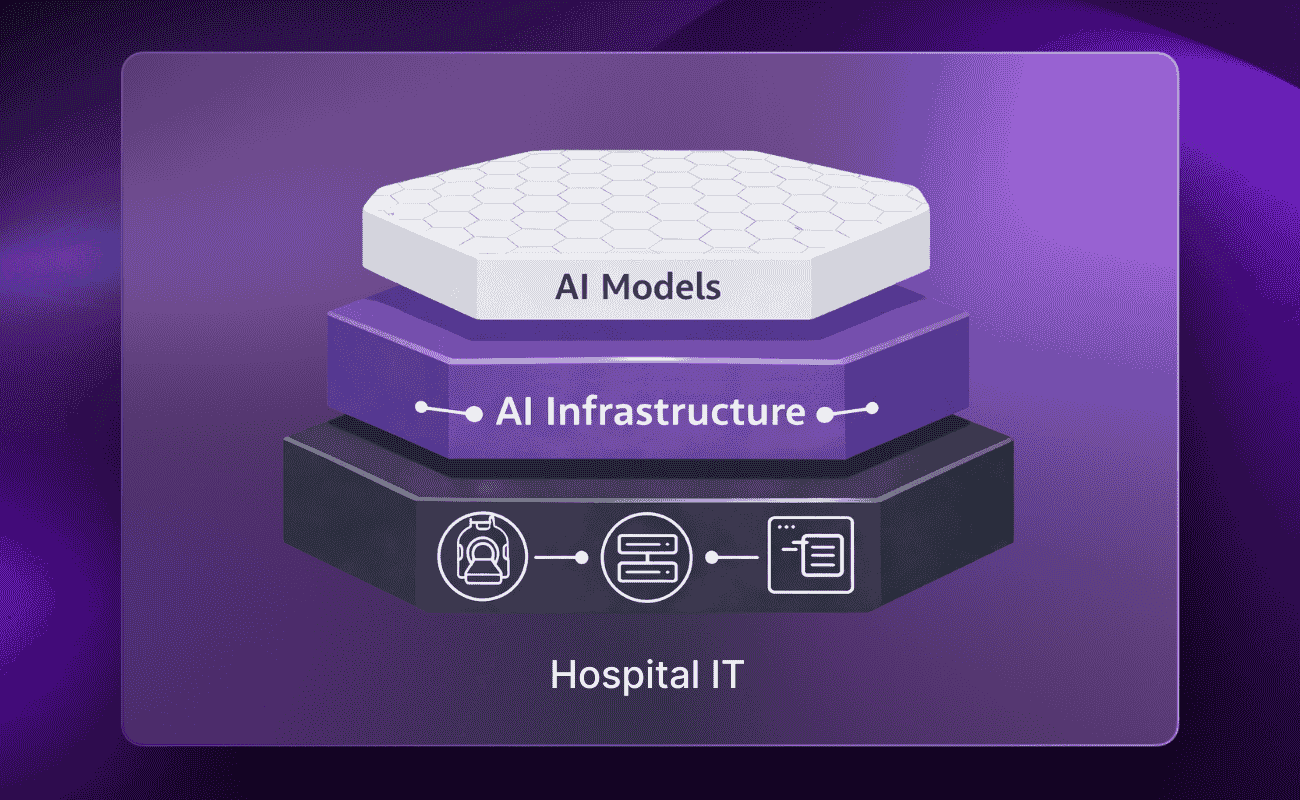

But so do the challenges:

- Data governance and traceability become mission-critical

- Infrastructure must support safe, scalable deployment

- Clinical validation and performance monitoring must evolve alongside the technology

Radiology is at the forefront of this shift. It’s a domain where structured data, high workload, and diagnostic precision intersect, making it an ideal proving ground for foundation model integration.

Conclusion

Foundation models are no longer experimental. They’re already reshaping how radiology is practiced, reported, and reviewed. But true impact depends on more than just powerful models. It requires thoughtful adaptation, domain-specific data, and a reliable infrastructure to support integration, validation, and monitoring.

Understanding these foundational elements isn’t just technical; it’s strategic. As the field advances, those who build and manage the underlying systems will shape the future of AI in healthcare.
