Can radiologists diagnose faster and more accurately through the use of AI tools?

A case study in head computed tomography diagnosis.

Artificial intelligence (AI) has improved significantly over the last decade. Advances in computing technology, combined with the availability of vast amounts of training data, have enabled AI applications to solve many challenging everyday problems. With the number of imaging studies steadily rising toward a billion per year worldwide [1], radiology is one of the areas where AI can help relieve the growing burden on healthcare specialists.

What tangible value can AI solutions bring to radiology?

Although AI solutions have proven successful at large scale in laboratory environments, skepticism remains about whether they deliver true clinical value. Ideally, they are tailored to a task that is time-consuming and/or error-prone for a radiologist. Real clinical value can be realized when AI supports tedious tasks, such as screening large volumes of data, while decision-making remains with the human expert.

A real-world study: Are radiologists faster, more accurate, and more confident using an AI-augmented CT head anomaly detection tool?

A recent study on the clinical utility of AI tools was published in the journal Diagnostics [1] (disclaimer: I am a co-author). Scientists from deepc and the Department of Diagnostic and Interventional Neuroradiology at Klinikum rechts der Isar of the Technical University of Munich investigated whether AI-based triage of head CT scans can improve diagnostic accuracy and shorten reporting times, and whether these effects depend on a radiologist’s experience.

Four radiologists with 1 to 10 years of experience were asked to diagnose a total of 80 cases. Half of the scans were normal; the other half showed pathological findings of varying type and severity. The radiologists assessed the CT scans both with and without AI augmentation in a zero-footprint, web-based DICOM viewer developed and provided by deepc (see Figure 1).

The researchers were particularly interested in the influence of AI assistance on 1) diagnostic accuracy, 2) reporting time, and 3) subjectively assessed diagnostic confidence. To measure these effects, each radiologist read every scan twice, once with and once without AI assistance, separated by a washout period of four weeks (see Figure 2). With AI assistance, participants were additionally shown a classification of the scan as normal, pathological, or inconclusive, together with a heatmap overlay highlighting anomalous regions (see Figure 1, left). The AI algorithm investigated in the study assigned a definite label (normal or pathological) in 75% of the cases. All of these definite labels matched the gold standard annotations, giving 100% accuracy on the definitely labeled cases.
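An AI output that is either a definite label or "inconclusive" can be thought of as classification with a reject option, summarized by two numbers: coverage (the share of cases that receive a definite label) and accuracy measured only on those cases. The sketch below assumes labels are plain strings; the function name `coverage_and_selective_accuracy` and the eight toy cases are hypothetical and are not data from the study.

```python
from typing import Sequence


def coverage_and_selective_accuracy(
    ai_labels: Sequence[str], gold_labels: Sequence[str]
) -> tuple[float, float]:
    """Return (coverage, accuracy on definitely labeled cases).

    Assumption: a case counts as 'definitely labeled' whenever the AI
    output is anything other than the string 'inconclusive'.
    """
    if len(ai_labels) != len(gold_labels):
        raise ValueError("label lists must have the same length")
    # Keep only the cases where the AI committed to a label.
    definite = [(a, g) for a, g in zip(ai_labels, gold_labels) if a != "inconclusive"]
    coverage = len(definite) / len(ai_labels)
    if not definite:
        # Accuracy is undefined when the AI never commits to a label.
        return coverage, float("nan")
    correct = sum(a == g for a, g in definite)
    return coverage, correct / len(definite)


# Hypothetical toy example with 8 cases (not data from the study).
ai = ["normal", "pathological", "inconclusive", "normal",
      "pathological", "inconclusive", "normal", "pathological"]
gold = ["normal", "pathological", "pathological", "normal",
        "pathological", "normal", "normal", "pathological"]
cov, acc = coverage_and_selective_accuracy(ai, gold)
print(f"coverage={cov:.0%}, selective accuracy={acc:.0%}")  # coverage=75%, selective accuracy=100%
```

Reporting both numbers matters: a tool can reach perfect accuracy on the cases it labels simply by labeling fewer of them, so accuracy alone says little without coverage.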

Diagnostic accuracy increases through AI support — particularly for less experienced radiologists

Without AI support, 94.1% of the studies a radiologist rated as healthy were actually healthy; this share is the negative predictive value (NPV). With AI support, the number of studies falsely classified as healthy (false negatives) decreased significantly, and the NPV rose from 94.1% to 98.2%. Similarly, the positive predictive value (PPV), which expresses how reliable a radiologist’s rating of a study as anomalous is, increased from 99.3% to 100%. Near-perfect positive and negative predictive values thus became achievable with AI support. The study also found that false positive detections were reduced by two thirds when the read was augmented by AI. This outcome is particularly interesting given that inexperienced radiologists tend to be oversensitive when reading cases [2].
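Both predictive values follow directly from confusion-matrix counts: PPV = TP / (TP + FP) and NPV = TN / (TN + FN), where a "positive" is a study rated as anomalous. The minimal sketch below uses hypothetical counts chosen only for illustration; they are not the study’s data, and the function name `predictive_values` is our own.

```python
def predictive_values(tp: int, fp: int, tn: int, fn: int) -> tuple[float, float]:
    """Return (PPV, NPV) from confusion-matrix counts.

    PPV = TP / (TP + FP): share of studies called anomalous that truly are anomalous.
    NPV = TN / (TN + FN): share of studies called healthy that truly are healthy.
    """
    if tp + fp == 0 or tn + fn == 0:
        raise ValueError("need at least one positive and one negative call")
    ppv = tp / (tp + fp)
    npv = tn / (tn + fn)
    return ppv, npv


# Hypothetical counts for illustration only (not the study's data).
ppv, npv = predictive_values(tp=45, fp=1, tn=48, fn=6)
print(f"PPV={ppv:.1%}, NPV={npv:.1%}")  # PPV=97.8%, NPV=88.9%
```

Note that NPV depends only on the reads called healthy, so reducing false negatives raises NPV directly, while a PPV of exactly 100% requires zero false positives among the reads called anomalous.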

Radiologists are up to 25% faster when using AI

The authors found that AI support reduced the time radiologists needed to read an exam by 15.7%. Both experienced and inexperienced radiologists benefited most from AI assistance when presented with normal scans; for inexperienced radiologists reading normal scans, reporting times fell by 25.7%. This finding is especially relevant because it indicates that AI can help in routine clinical settings by serving as a “second read”.
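Percentages such as the 15.7% and 25.7% time savings are relative reductions: (before − after) / before. The sketch below applies this to mean reading times; the per-case times are hypothetical, and it is an assumption on our part that comparing means is how such a figure is aggregated (the study may aggregate per reader or per case differently).

```python
from statistics import mean


def relative_reduction(before: float, after: float) -> float:
    """Fractional reduction from `before` to `after` (0.25 means 25% lower)."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before


# Hypothetical per-case reading times in seconds (not the study's data).
unassisted = [60.0, 80.0, 100.0]
assisted = [48.0, 68.0, 88.0]
print(f"{relative_reduction(mean(unassisted), mean(assisted)):.1%}")  # 15.0%
```

The same formula describes the false positive result above: going from three false positives to one is `relative_reduction(3, 1)`, roughly 0.667, i.e. a reduction by two thirds.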

The study also examined whether AI assistance influences radiologists’ subjective diagnostic confidence when reading head CT scans. Subjectively assessed diagnostic confidence did not change significantly with AI assistance.

Evaluation of the clinical value of AI tools is crucial — partnerships with trusted experts are key

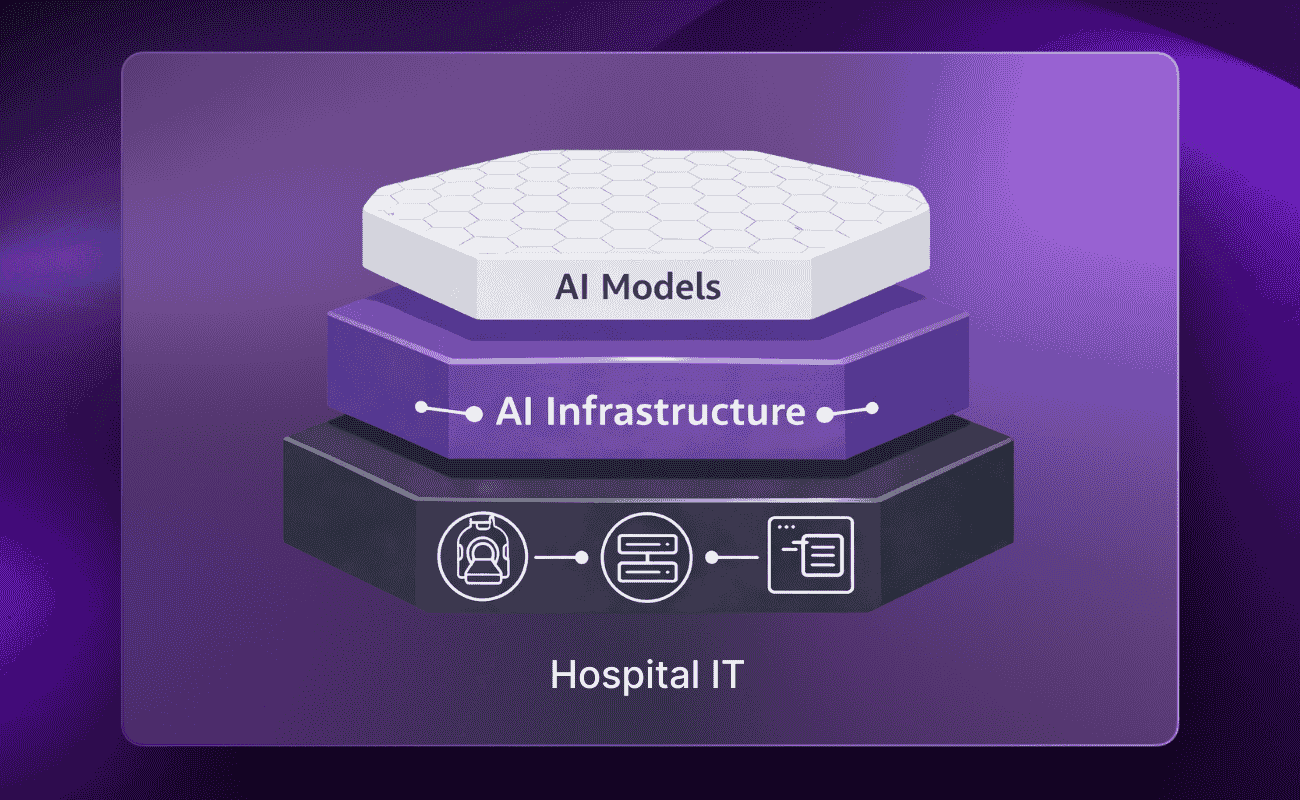

The study presented here demonstrates the clinical utility of one AI tool. Its findings send a clear message: further integrating AI into routine radiological practice not only improves diagnostic quality but also helps address the increasing cognitive load on the radiology workforce. Investigating the clinical value of AI solutions is a crucial part of every AI strategy, but it is also resource-intensive. AI platforms such as deepc’s radiology operating system deepcOS support clinical value evaluation as an independent third party, making the integration of AI solutions into clinical workflows seamless and easy for radiological institutions.

Author:

Mehmet Yigitsoy | Principal AI Engineer | Head of AI Labs at deepc

References

- [1] Finck, T.; Moosbauer, J.; Probst, M.; Schlaeger, S.; Schuberth, M.; Schinz, D.; Yiğitsoy, M.; Byas, S.; Zimmer, C.; Pfister, F.; Wiestler, B. Faster and Better: How Anomaly Detection Can Accelerate and Improve Reporting of Head Computed Tomography. Diagnostics 2022, 12, 452. https://doi.org/10.3390/diagnostics12020452

- [2] Alberdi, R.Z.; Llanes, A.B.; Ortega, R.A.; Expósito, R.R.; Collado, J.M.V.; Verdes, T.Q.; Ramos, C.N.; Sanz, M.E.; Trejo, D.S.; Oliveres, X.C. Effect of radiologist experience on the risk of false-positive results in breast cancer screening programs. Eur. Radiol. 2011, 21, 2083–2090.

- [3] Berlin, L. Radiologic errors, past, present and future. Diagnosis 2014, 1, 79–84.

- [4] Smith-Bindman, R.; Kwan, M.L.; Marlow, E.C.; Theis, M.K.; Bolch, W.; Cheng, S.Y.; Bowles, E.J.A.; Duncan, J.R.; Greenlee, R.T.; Kushi, L.H.; et al. Trends in Use of Medical Imaging in US Health Care Systems and in Ontario, Canada, 2000–2016. JAMA 2019, 322, 843–856.
