2026 Is the Year AI Strategy Becomes Infrastructure Strategy

2026 is when imaging AI stops being “a set of tools” and becomes a strategy decision

Radiology AI is entering 2026 with a noticeably different energy than even a year ago. At RSNA 2025, the big shift was not a single breakthrough model or flashy demo. It was the market’s maturity.

Buyers, vendors, and health systems are moving from experimentation to operating models: how AI gets evaluated, deployed, governed, funded, renewed, and expanded over time.

Below, we break down the five shifts that are shaping medical imaging IT and AI, and what they mean for how you should set strategy without locking yourself into yesterday’s assumptions.

Platform strategy will be redefined in 2026

The shift: The conversation is moving from “Which collection of AI models should we buy?” to “What strategy do we need to run AI safely, at scale, across time?”

At RSNA 2025, this shift became harder to ignore. Buyers increasingly recognised that simply choosing AI models was no longer enough to ensure AI would deliver sustained value in practice.

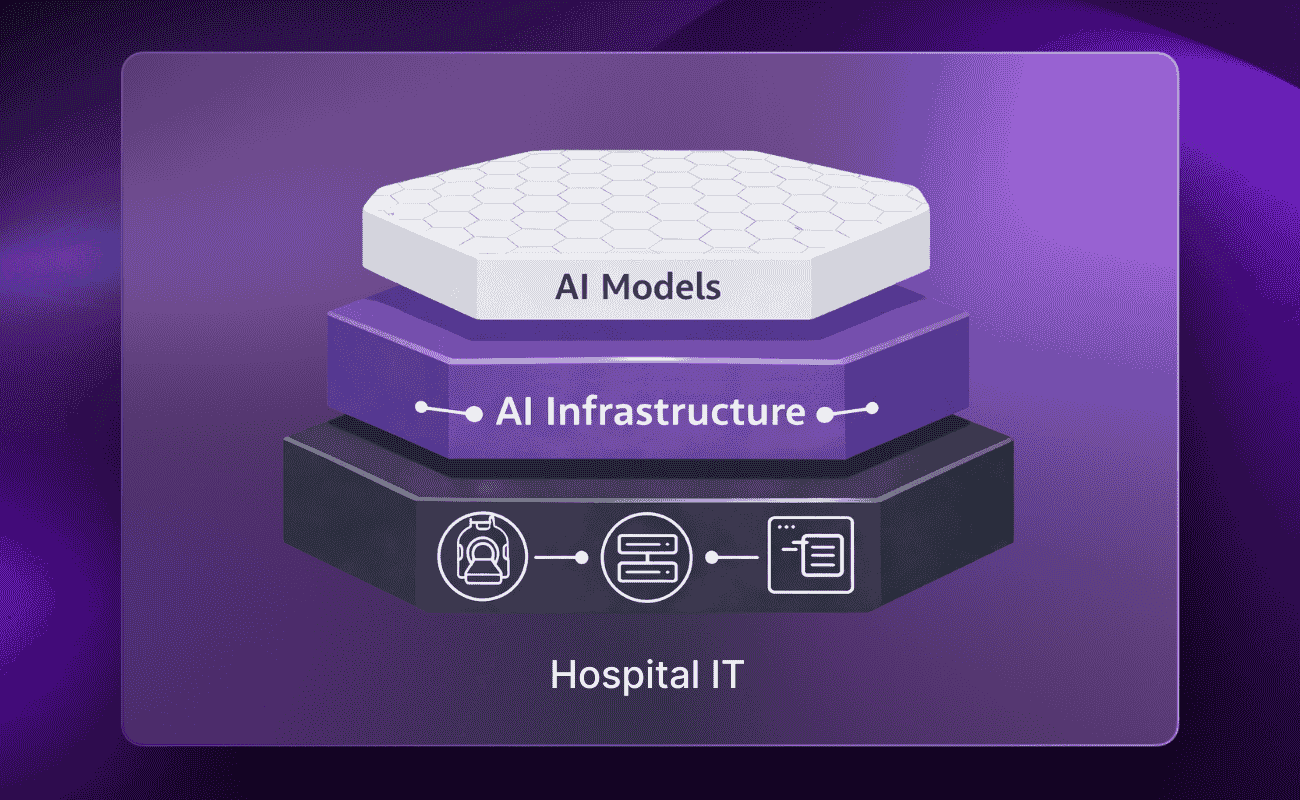

As a result, buyers have begun to distinguish between two layers:

- The AI layer: clinical capabilities powered by AI models, ranging from task-specific tools to foundation and multimodal approaches

- The infrastructure layer: the operational backbone that integrates, orchestrates, and governs AI across hospital workflows, controlling how models are deployed, combined, monitored, updated, and relied upon over time.

This distinction matters because AI delivers value only in operations: through turnaround time, adoption, consistency, measurable outcomes, and sustained performance. Those outcomes are achievable only when AI is governed, monitored, and managed safely and compliantly over time.

How to prepare for 2026

- Write your AI strategy as an operating model, not a shopping list.

- Define what must be true for AI to work in your environment: integration points, uptime expectations, workflow routing, escalation paths, audit trails, and continuous monitoring that supports safe, compliant performance over time.

- Treat “platform” claims carefully. In 2026, the market will get better at distinguishing real infrastructure depth from surface-level bundling or rebranding.

A practical question to ask vendors and platforms:

“Show me how this operates as a system over time across multiple vendors, including how AI solutions are evaluated, selected, monitored, compared, and governed after deployment.”

AI is being deployed across complex imaging environments

The shift: AI is increasingly being deployed in high-volume, multi-workflow imaging environments, where scale, variability, and performance expectations demand a more operational approach.

These environments often require teams to:

- Balance standardised AI deployment with local workflow realities

- Integrate AI across heterogeneous scanners, PACS, and reporting systems

- Measure success in operational terms such as turnaround time, adoption, and throughput

- Maintain reliability and performance as volume and usage scale

Across RSNA 2025, a consistent theme emerged: AI is increasingly embedded in the imaging production line, operating across diverse workflows, systems, and clinical environments. This raises the bar for reliability and consistency, as AI must now perform under varying protocols, infrastructure, and local practices.

How to prepare for 2026

- Design your AI operating model to accommodate multi-site variability (different scanners, protocols, workflows).

- Define success in operational terms, including adoption, workflow fit, and reporting timelines, rather than relying on accuracy alone.

- Ensure AI outputs land where radiologists actually work (not in a separate destination nobody checks).

- If you’re running across many centres, plan for central governance + local workflow flexibility.

Simple maturity test:

Confirm that when one site or department changes a protocol, AI performance and workflow stability are maintained without a major reimplementation project.

Consolidation pressures are intensifying across the AI market

The shift: As the AI market matures, commercial strategies are evolving, with increased bundling, vertical integration, and consolidation emerging as vendors adapt to changing buyer expectations.

This is not inherently “good” or “bad.” It’s a predictable reaction when:

- Capabilities become easier to replicate

- Buyers demand proof, integration, and ROI

- Price pressure increases

- Foundation models lower the barrier for organisations to develop or adapt AI tailored to their own workflows, data, and patient populations

- AI vendors try to defend margins by bundling or expanding into platform narratives

In 2026, this will likely mean more:

- Vendors repositioning themselves as platforms

- Exclusive partnerships

- Mergers, acquisitions, and portfolio reshaping across the AI vendor landscape

- Health systems building more in-house capabilities

How to prepare for 2026

- Plan for vendor churn, mergers, and acquisitions as a normal condition of the AI layer.

- Avoid strategies that assume any single vendor portfolio will remain constant, sufficient, or future-proof.

- Protect your organisation’s long-term resilience by prioritising portability and continuity: the ability to swap, add, or remove AI tools without breaking workflows.

Planning for continuity in a changing AI market

Importantly, this consolidation is not a judgement on the clinical value of existing AI solutions. Many task-specific tools continue to deliver excellent results in practice. The challenge for healthcare organisations is ensuring these tools can be adopted and evolved without creating long-term operational fragility.

Foundation models and generative AI are set to play a larger role

The shift: Foundation models are changing the economics and speed of AI development, and generative AI is pushing deeper into workflow, especially reporting.

At RSNA 2025, two themes were especially visible:

Foundation models accelerate development and broaden use cases

Foundation approaches can reduce reliance on large labelled datasets and enable faster adaptation to new tasks and environments. That lowers the barrier to entry, speeds up innovation, and makes in-house customisation to specific workflows and tasks feasible where it was previously impractical or too costly.

Generative AI creates “horizontal” ROI

GenAI-driven reporting and workflow automation can deliver benefits across many exam types, not just a single use case. That’s strategically different from point solutions.

How to prepare for 2026

- Treat foundation models as an accelerator, not a replacement. In many settings, task-specific models (including CNN-based systems) will remain essential, especially where performance, interpretability, and regulatory maturity are well established.

- Expect more hybrid reality: foundation models + task-specific models in the same workflow.

- Put guardrails around generative AI:

  - clear human-in-the-loop expectations

  - structured templates

  - auditability

  - ongoing evaluation and monitoring

A healthy strategy stance

Use the best tool for the job, and ensure you have the infrastructure to manage a mixed ecosystem safely.

Operational readiness and governance become non-negotiable

The shift: As AI moves from pilots into everyday use, it becomes part of core imaging operations, and must be run with the same operational and governance discipline as any other critical system.

This shift is being reinforced by the nature of modern AI itself. Foundation models and generative systems are not static products that can be validated once and left unchanged. They evolve quickly, adapt to context, and behave differently across workflows, sites, patient populations, and time. As a result, both operational reliability and regulatory expectations are moving beyond one-time, pre-deployment approval toward continuous post-deployment oversight.

In practice, organisations are increasingly focused on:

- Uptime and reliability as baseline expectations

- Predictable deployment and integration across complex environments

- Ongoing workflow tuning and clinician adoption

- Continuous visibility into AI performance, behaviour, and drift after go-live

- Governance mechanisms that support traceability, auditability, and accountability over time

- Structured training and change management as AI systems evolve

In other words: AI is no longer an “add-on.” It is becoming part of how imaging is delivered and regulated in practice.

How to prepare for 2026

When evaluating AI solutions and platforms, score governance and operational readiness alongside technical performance.

Ask for evidence of how systems support:

- Continuous performance monitoring across sites, models, and versions

- Transparent audit trails and reporting that can adapt to evolving regulatory expectations

- Clear escalation paths when performance changes or issues arise

- Controlled updates, versioning, and rollback without disrupting clinical workflows

- Independent oversight, rather than governance tightly coupled to a single vendor

Build internal ownership by clearly defining who is responsible for AI performance, workflow integration, and governance across the lifecycle, not just procurement or initial deployment.

A maturity signal for operational readiness:

Assess whether your organisation can maintain operational and regulatory control over AI as models evolve, workflows change, and new evidence emerges, while still having clear escalation paths and support when needed.

The 2026 playbook: how to set strategy without getting trapped

If 2026 is about AI maturity, the best strategy is not to bet everything on a single model type, a single vendor, or a single “platform” narrative.

Instead, build your AI program around three anchors:

- A stable infrastructure foundation that supports integration, routing, security, governance, and monitoring

- A flexible AI layer that can include both best-in-class task-specific tools (including traditional CNN solutions) and emerging foundation / generative approaches

- An operating model that treats evaluation, deployment, and lifecycle management as continuous rather than one-off projects

A lightweight checklist for 2026 readiness

- Can we evaluate tools on local data without heavy IT lift?

- Can we compare vendor performance to identify the best tool for a given task?

- Can we deploy and integrate AI into real workflows (where clinicians work)?

- Can we monitor performance and adoption over time?

- Can we swap vendors without breaking operations?

- Can we prove ROI beyond marketing claims?

- Do we have the support model to run this at scale?

- Can we integrate and operationalise our own in-house AI tools when needed?

- Can we govern post-market compliance at scale across vendors, tasks, and model types?